Get ready for a captivating conversation about AI and its impact on the WordPress community, as James LePage, the founder of CodeWP, recently appeared on the Torque Social Hour for an in-depth interview. The content of this interview offers insights into the integration of AI into the WordPress ecosystem and its potential to revolutionize website development.

In this video, James discusses the CodeWP platform and its use of AI to create WordPress code snippets, as well as how AI in general and the large language models created by OpenAI work. The discussion also highlights the importance of helpful tools and resources in the AI industry and their impact on the development process.

Additionally, the panel explores the potential benefits and opportunities that come with incorporating AI into the WordPress community. It provides a glimpse into the future of the WordPress ecosystem and how AI will continue to shape and evolve it.

Video Transcript

This video transcript was generated by AI.

[00:00:01.850] - Doc Pop

Hey, welcome to the Torque Social Hour, a weekly live stream of WordPress news and events. My name is Doc and I'm a contributor over on torquemag.io, a great source for tutorials and interviews like this. So if you enjoy this, visit torquemag.io. The discussion of AI is everywhere these days. And I know that on the Torque Social Hour, for two of the last three episodes, I think, AI and WordPress has been sort of our main topic. Of course, this is a WordPress show, so we are going to try to keep this very specific to AI and WordPress. But all the hype around AI goes far beyond WordPress. On Monday, Microsoft announced that AI-powered answers would accompany top results in their search engine, Bing. And shortly after, Google did the same thing, talking about Bard, which is going to be their form of AI answers in your search results. It's not just in search, though. We're seeing AI-powered image synthesizers like DALL-E and Stable Diffusion that were huge last year, and I'm sure they're still huge. But we've also seen the rise of chat tools like ChatGPT.

[00:01:17.710] - Doc Pop

These tools use AI and they can generate poems, they can write book reports, they can even write lines of code. And I think all of this brings us to something that actually just happened today, bringing it back to WordPress. Jetpack, the WordPress plugin, just quietly launched two experimental new features that use AI. One does image generation, similar to Stable Diffusion, in your WordPress post editor, just as a Gutenberg block. The other reads your current post and generates another paragraph, or whatever you need, based on what you've written so far. So it's everywhere. AI is definitely everywhere, and it is in WordPress. And so that's what we're going to talk about this week on the Torque Social Hour. I've got Anthony Burchell, a software developer at WP Engine. Anthony, how are you doing today?

[00:02:15.310] - Anthony Burchell

Doing well, I promise. All of my responses are human generated. Human generated.

[00:02:20.590] - Doc Pop

I always have that temptation to be like, oh, wouldn't it be clever if AI wrote this intro? I do want to tell people I wrote that intro myself. I have used AI, though. But we are also going to be talking to James LePage, the founder of CodeWP.ai, a tool that uses AI to generate WordPress plugins for kind of no-code solutions. James, how are you doing today?

[00:02:47.350] - James LePage

I'm doing very well. Thank you guys for having me. I really appreciate being able to be a part of the conversation.

[00:02:53.670] - Doc Pop

Well, it's extra nice for me because, like I said, in two of the last three episodes we definitely talked about AI in the livestream, and CodeWP kept coming up. So it's nice, instead of just guessing about what's happening, to actually be able to talk to you and ask you questions about it. Let's start off: I think you're a web developer who then kind of got into software, but I might have it backwards. You might have been deep into software and then got into web development. Why don't you tell us your history of how you got into WordPress, and also your history of getting into AI as well?

[00:03:31.730] - James LePage

Yeah, definitely. So I've been involved in WordPress and the general community for around eight years now. When I was really young, I started doing WordPress websites just on my own, developing random little sites, and it eventually grew into creating sites for the local businesses in my neighborhood. And it really just continued to grow over the years to creating websites for larger and larger companies. Building an agency, kind of falling into the agency in that I had so much work, I needed more developers, I needed designers. So I created the Isotropic agency. I've been running that for around five years now, and that also scaled over time as well, where we went from smaller projects to pretty major projects really involved in WordPress. We only did WordPress and then eventually WooCommerce websites and that kind of grew. And then I decided to scale it back down as I was finishing up my college degree in entrepreneurship and real estate. But as that was happening, I was also kind of learning more about actual development and PHP and JavaScript and how to create these functionalities that my developers were previously creating for me, and also fell into the rabbit hole of AI as it was kind of becoming more mainstream, leaving the academic space and entering into industry.

[00:05:01.400] - James LePage

So that's kind of the development side of it. I've also been really involved in content creation, specifically in the community for a page builder called Oxygen, but also in WordPress as a whole, creating a lot of content on my blog, isotropic.co, which some of you may have stumbled across just because it ranks high for a lot of keywords that WordPress creators look up. And I've also done video tutorials and just a lot of stuff in the general industry talking about WordPress. So that's kind of my background and my general history doing it.

[00:05:41.950] - Doc Pop

I'm going to drop a link to the Isotropic blog. We've got this article here that I'm going to link to: Should WordPress creators use ChatGPT for everyday tasks? And that definitely brings us into what we're going to be talking about this episode, which is CodeWP. Well, I mean, is CodeWP ChatGPT-powered?

[00:06:05.350] - James LePage

No. So the way I got into AI was I was interested in it. I was running the agency, and we were doing a lot of custom development. A lot of that development was code snippets specific to WooCommerce, extending these little functionalities based on the client requests at the time. So I started kind of playing around with the idea of just using AI to augment the workflow. We had a lot of snippets, we had a lot of developers, we had a lot of needs and requirements in the agency. So it was an internal tool. And I also started sharing, kind of, here's what I'm working on, here's what I'm interested in, with the Isotropic community, specifically through our Facebook group. And there was a lot of interest in using it themselves: how can I do it myself, and how can I code and develop and understand this general industry? So my community and I grew our interest in and understanding of AI together, and that eventually just grew into CodeWP. It was a tool that I made in a weekend using WordPress and ACF and all of these plugins to make it accessible to the community.

[00:07:23.870] - James LePage

Here's what I'm working on, here's how it works. By the way, it's cool: I built this with WordPress and Oxygen. So that tool was just meant for the community, and it started growing really quickly because AI is definitely a trend and people are super interested in it. But I think it also offers utility to a lot of WordPress creators. So that's how we kind of got into CodeWP. And then to get back to the original question of what it actually uses: it originally used OpenAI's Codex, which is an API that points towards a large language model intended to create code. But as we grew the platform, Codex wasn't really satisfying our needs. And there were a lot of questions around where it got its data from and how it was creating the data, and a lot of different questions that came from the community. We eventually worked backwards and started using GPT-3, but also additional third-party providers like Cohere, to create these code snippets based on language prompts. So we're not using ChatGPT. There's actually no publicly available API for it right now.

[00:08:47.190] - James LePage

Other apps are using it by, I believe, creating a headless Chrome instance, injecting the content into it, and then pulling it back out. Eventually there will be an API. There's a waitlist. And it is a different model than GPT-3, but for us, GPT-3 and the Cohere models are doing very well based on our implementation. And I'd love to talk about what goes on between "I want this snippet" and how the snippet is created, and then how it's intended to be used as well.

[00:09:22.290] - Doc Pop

I want to ask you, you mentioned Oxygen. Is that Oxygen AI?

[00:09:26.310] - James LePage

No. I think if you were to look it up on Google, you'd be looking up Oxygen Builder. It's a builder that's intended more towards, I'd say, advanced users of WordPress. I got into it years and years ago because we were looking for a solution that our clients could use, but that also gave us the capability to create these custom elements and incorporate custom code in an easy way, while not having to do custom themes.

[00:10:00.570] - James LePage

I kind of just grew with the product and embedded myself in that community, as well as the communities around optimizing websites for speed. And those were my first forays into the WordPress community as a whole. I'd always read Torque magazine and kind of been a part of that aspect of WordPress, but never really gave back to it in the form of interacting with these groups. So Oxygen was the first main page builder and group that I embedded myself in, and from that I also realized there's a great opportunity to carve out my niche in terms of content creation. There are a lot of questions that I can answer, so I started writing articles on the Isotropic blog and doing videos. The blog itself is not really where it used to be, but it became very popular and garnered a lot of page views. That's kind of what I've been a part of for the past three years, and a lot of my community started off with me in Oxygen and grew into overall WordPress and AI, and just exploration of the most modern trends, all related back to WordPress, in Facebook and through the blog content.

[00:11:24.490] - Doc Pop

I have to say, I'm surprised that you're using GPT-3. That's the OpenAI project that I think came out in 2020, and ChatGPT feels light years ahead. I mean, if you compare the two now, they seem like radically different products.

[00:11:42.480] - Anthony Burchell

They're based on the same model. Like, the Davinci model is what ChatGPT is using, I believe. So they're pretty similar, aren't they?

[00:11:51.330] - James LePage

Yeah. So the way ChatGPT works is it took the original GPT-3 model and then trained it to be specific to that chatbot implementation. The first thing they did was create a new model called InstructGPT, and that has more information about it. Information on the way ChatGPT works is kind of sparse. We really only have an OpenAI blog post to go off, at least I believe; maybe it's changed and more information has been released since then. But we have a lot of information on kind of how they trained it and how it works. They took GPT-3, which is really good at all different types of tasks, simply because it's a humongous model that has a lot of use cases baked into it. And then what they did is they created a lot of prompts and answers and said, rank these answers based on how good they are. And they said that to a human. They said, read these responses and see which ones are good. And the humans ranked them: oh, this response is good, this response is good, this response is good. And from that they were able to tailor the model to answer the question that was being asked in a chatbot-like way.

I believe it's called a reinforcement learning model or training model: reinforcement learning from human feedback. And really all that means is, as the name suggests, humans rank the generations. And from that you can create a more conversational and instructional model. So it's really based off all the same infrastructure. They never retrained GPT. They went from GPT-2 to GPT-3, which was a retraining of similar concepts with additional data and parameters. And eventually GPT-4 is coming, with similar concepts and additional data and parameters. But in the interim, they took GPT-3, added additional, more up-to-date information, and then also trained it to be specific to that use case.
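Editor's note: the ranking step described here can be pictured as turning one human ranking into pairwise comparisons, which is the kind of data a reward model is commonly trained on. The following is a minimal sketch for illustration only, not OpenAI's code; the function name and the sample responses are made up.

```python
from itertools import combinations

def ranking_to_preference_pairs(prompt, ranked_responses):
    """Turn one human ranking (best first) into (prompt, preferred, rejected) triples."""
    pairs = []
    # combinations() keeps input order, so the first item of each pair ranks higher.
    for better, worse in combinations(ranked_responses, 2):
        pairs.append((prompt, better, worse))
    return pairs

# Hypothetical example: a labeler ranked three answers, best first.
question = "How do I register a custom post type?"
pairs = ranking_to_preference_pairs(
    question,
    [
        "Use register_post_type() on the init hook.",
        "Edit the database directly.",
        "I don't know.",
    ],
)
for _, preferred, rejected in pairs:
    print(f"preferred: {preferred!r}  over: {rejected!r}")
```

Each triple says only "this answer beat that one", which is all the ranking carries; how those pairs are then used to adjust a model is outside this sketch.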

[00:13:59.630] - Anthony Burchell

Yeah, and actually, Doc, this is kind of similar to what we talked about last week on the show, where, for context, I have a plugin where I added these NPCs that can be primed with personality data. And based on that personality data, when you talk with them, they will take that into account and respond to you with what they know. ChatGPT is similar, but it's very pointed and very mission focused. But it's essentially the same thing as we were talking about last week, where one of the personalities of my agents is that it's a highly advanced AI robot assistant programmed to excel in technical tasks. Though dedicated to their duties, they have a passion for music and entertainment. And you start building this personality so that when it responds to you, it knows that I'm into music, I like these things, but also I'm really technical and I can respond in this way. So ChatGPT is just like one implementation. I think they famously said that they built it just because they were shocked that no one else had already built it, because it was just so easy.
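Editor's note: priming an agent with personality data usually amounts to placing that text ahead of the conversation in the prompt. The following is a minimal sketch under that assumption; the function name and prompt layout are hypothetical and are not taken from Anthony's plugin.

```python
def build_primed_prompt(personality, history, user_message):
    """Prepend personality text to the conversation so the model answers in character."""
    lines = [f"Personality: {personality}", ""]
    for speaker, text in history:
        lines.append(f"{speaker}: {text}")
    lines.append(f"User: {user_message}")
    lines.append("Agent:")  # the model is expected to continue from here
    return "\n".join(lines)

# Personality text quoted from the discussion above.
personality = (
    "A highly advanced AI robot assistant programmed to excel in technical tasks. "
    "Though dedicated to their duties, they have a passion for music and entertainment."
)
prompt = build_primed_prompt(
    personality,
    [("User", "Hi!"), ("Agent", "Hello!")],
    "What do you like?",
)
print(prompt)
```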

[00:15:05.090] - James LePage

Yeah, I believe they built it, I don't want to say in a weekend, but in really quick, record time, because they were like, this doesn't exist, and this is a perfect way to allow people to interface with this AI. And if I make it free, it gives me a lot of training data. Like, if you go on ChatGPT and you create a generation and it's good, you can give it a thumbs up, and it's not just a fun button to press. They're using that data to then retrain the model, which is kind of what we do in CodeWP as well. And if you thumbs it down in ChatGPT, it'll be used to discourage that behavior. I'm not exactly sure how it works, because there's not too much information out there, but I'm assuming they're using their user base as that reinforcement learning human feedback loop. So I think it's really interesting why they released it. Number one, to show the world what it could do, and the chatbot was the perfect interface to do that. And number two, they're 100% using the behavior of the users, what the prompts are, and what industries the prompts fall into to create a better model now, but maybe also to create industry-specific models. There are a lot of things to look at when you're looking at what they released and why they released it.

[00:16:31.870] - James LePage

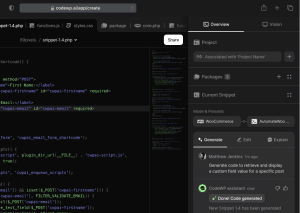

So let's talk about Code WP, an AI code generator for WordPress creators. The idea is that anyone can use this tool to create anything for WordPress plugins is the first thing that comes to mind. But you've got several other examples here. James, I want to hear about a cool success story. What's the coolest plug in that you've heard someone create? I mean, it could be something you created using Code WP.

[00:17:03.510] - James LePage

Yeah, so I think the first thing with CodeWP was that when we first launched it, it was like, all right, let's make this thing where you put in "I want the WooCommerce button to be blue now," and we will spit out a zip file that you upload to the site. That was kind of our original premise when we went into commercializing a product, but it turned out, number one, people didn't really want that. And number two, with the current infrastructure we have, it's not easy to do and be right all the time, and it's important to be right all the time and not introduce massive issues to user websites. So right now, the closest the site will get you to a plugin is that you can export a code snippet as a JSON file, which can then be imported into the Code Snippets plugin. So it's not creating plugins. Instead it's creating snippets. And in most situations, it's creating multiple functions that work together to fulfill the goal of your prompt. I think eventually we may get to creating plugins, but it's difficult to do that in, I don't know if the proper word is a responsible way, but it's difficult to do that.

[00:18:18.510] - James LePage

So right now, and I think into the near and maybe far future, creating snippets that introduce functionality into a website is the goal of what we're doing, not just because it's the easiest thing to do, but because I think it's also the most user-friendly WordPress implementation of what our platform could be. Now, in terms of the cool things that we've created, there's a lot. And I think the thing that resonates with my community the most is looking at paid WordPress plugins or WooCommerce plugins. So we went on Woocommerce.org and we asked some of the users to point at the plugins that they wanted to see functionality from. You still have to pay for them, but they're not the most expensive ones out there. And they introduce some small functionality, like minimum and maximum limits for variable products, for example, or sending a Slack notification every time a product is purchased or we hit 100 users, or something like that. I think those types of snippets are the coolest example of what the AI can do, because of how we've built the platform. But I also think that, at least for me, the coolest usage of the platform is when I need to go and create a custom post type. We have a Firefox extension. I'll open the Firefox extension in dev tools, I'll say "register this post type called Cars," and it will create the post type, and it will also create all of the labels for that post type. I don't need to go and think about what the labels are going to be. So for me, as a developer who creates WordPress websites, it's more those simple admin tasks that are super helpful. And using my own product to influence my workflow is, number one, great for me and really rewarding, but it also really dictates the direction of the product too.

[00:20:29.310] - James LePage

Me and the developers at Isotropic are like, what do we want, what features do we need to add? So that's why we added the code snippet export, and that's why we built the Firefox extension, because that's what we wanted and that's what would help us create. So I hope that answers the question of what's the coolest thing you've created. I've seen a lot of people sharing snippets with really cool Chart.js implementations, like "show me a breakdown chart and make a dashboard widget of it." So, I mean, the use cases are really endless, and it's really cool to see what people share in the community, what they've created.

[00:21:14.670] - Doc Pop

Well, that definitely tells me that, yeah, I've been thinking about this wrong. I think maybe the early launch talked more about plugins, but it sounds like rather than exporting zip files that you would import as a plugin, you're talking about custom PHP snippets that people, like non-coders, would add to functions.php, something like that.

[00:21:37.130] - Anthony Burchell

Is that through a plug in? You said it was a snippet plugin, right? Yeah. So you import the.

[00:21:44.210] - James LePage

I think the user flow was very influenced by Oxygen, and I'm not really sure if this is as widespread in other communities. Oxygen replaces the WordPress theme, so when we switched to Oxygen, we did not have access to functions.php. To get around that, we used code snippet management plugins. Code Snippets is probably the biggest free one that a lot of people know about, but there are also premium snippet management plugins that are just very easy to use and allow you to visually see what snippets are present on your website. And the community used that: they either did that or they built custom plugins. Because of that, we had a lot of snippets. We shared a lot of snippets. And that flow of, all right, let me code something to add this little functionality instead of doing an entire plugin, translated over to our work and to a lot of people in Oxygen, and in turn to the Isotropic community, and then in turn to the CodeWP community.

[00:23:03.690] - Doc Pop

You mentioned that you were concerned about the PHP, I'm sorry, the plugins, that if you were doing those they might not work as well. Are snippets more reliable, like less likely to break? Is that part of the issue?

[00:23:21.470] - Doc Pop

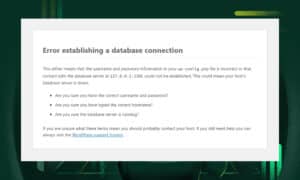

So from a technical side of things, just creating the plug in itself for most of the requests that we got was, I think, with the current infrastructure possible by pushing it. And what I mean by that is, if I'm creating a plug in, I'm probably going to need some custom CSS and JavaScript and PHP. And there's so many moving parts to creating a plugin that can be installed in a website. It's tough to actually create it, and then it's also expensive. It's very expensive to even do the snippets that we're doing now because it's not computationally expensive. Yes. And I can go into what we do to get to a snippet. But it's expensive to make these snippets. In general, the requests that we're getting are smaller. Requests that don't need to be fulfilled by a snippet. I'd say so. I think the example is, like, I want to hide the Add to Cart button and WooCommerce on this page and this page and this page and this post type. You don't need a plug in to do that. You need a snippet. And even if you're not using oxygen, you put it in functions.

[00:24:44.750] - Doc Pop

PHP and I think that's really the standard way you would add that little chunk of functionality. So for us, that's a really good niche to fill, because we don't need to take the burden of creating these plug in, these massive plugins that, number one, are expensive, but number two, they're also dangerous. I think the amount of code that goes into them exponentially increases the likelihood that something could be wrong and there could be some negative part of that plug in. And then also we had built the ability to export a Zip and import it into WordPress. And I think it inspired a lot of people to kind of just download it and upload it and then see a white screen of death. So doing it that way wasn't the most safe mechanism. And that was early days when we didn't have any kind of security vetting or multilayered prompts or prompt categorization or even fine tune models for specific use cases. So I think doing it as a plug in right now is difficult. In the future. It'll get technically easier. It'll get more accurate. We might get to the point where you are really creating an entire plugin based off one prompt.

[00:26:08.050] - James LePage

But I don't really see that in the near term in terms of feasibility or even something that I personally would want or would want to build.

[00:26:22.490] - Doc Pop

Anthony, I'll let you ask another question, but I'm going to just mention that there's another tool I found recently, I guess kind of similar to CodeWP. It's called WPCodey, by WPCodeBox, and it is an AI-powered snippet generator. I hadn't heard as much about this one, but it is still, I guess, kind of in beta. It says at the bottom, "WPCodey is in alpha stage. The code it generates shouldn't be used in production." And that brought to mind a concern that I think Chris Wiegman was talking about recently, where... you're in New York City, we can tell it's quite noisy out there.

[00:27:05.740] - James LePage

Yeah. Can you guys hear that?

[00:27:07.100] - Doc Pop

Yeah, but yeah. He mentioned on an episode the possible risk that could happen if you use AI code with WooCommerce, you might accidentally expose something that puts customers at risk. You mentioned you have a security review team. Can you kind of tell us a little bit about the risks that people could have by using a tool like this and how you all are addressing those?

[00:27:34.050] - James LePage

Yeah. So first, shout out to WPCodey. I know the developer. WPCodeBox is the core product. If you buy that product, you get access to WPCodey. And they were actually around before me. That product is kind of what I was talking about in terms of the paid snippet management plugins. That's one of them, and it's probably the best, in my opinion. So we love them. Getting back to the security concerns, there are a lot of different rabbit holes we could go down. Say you were to prompt the GPT-3 API and say, I want this functionality that sends this data this way, or I want this functionality that updates my database. I think the database example might be better. It updates my database: I want to remove these keywords and do this and do that. And it just spits something out, and you take it and you install it, and your website is destroyed. And hopefully you backed up your database before that. I think with the core models, that's probably what would happen. They're getting better, and especially if we go back to ChatGPT, it's being trained against that.

[00:29:00.480] - James LePage

But that's kind of what we did with CodeWP, to the max. It became really apparent in the beginning that there were security holes. The snippets weren't, for example, sanitizing inputs. If we built a form, you could just inject JavaScript somewhere. It was because the models were untrained and unbiased towards security. So what we ended up really focusing on in the beginning is a process. Instead of just taking this prompt and saying, okay, API, make this (we have a pretty interface with a button that says "make prompt" and spits the prompt out, and now you have the prompt), we first built the platform to inspire people to test and to review the code. And if they didn't understand the code, to send it to a developer, or ask our 24/7 support, which is, most of the time, me: what does this do? And then as the platform grew into something that was definitely more of a commercial, widespread product being used by people who weren't just developers, we took a step back and we were like, all right, let's rework the prompts. Instead of just maybe getting the right snippet 90% of the time, let's really focus on inspiring the model to always give secure code and always create these good snippets.
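Editor's note: the "inject JavaScript" problem mentioned here is the classic case of unescaped user input ending up in HTML. In WordPress the fix is typically an escaping function such as `esc_html()`; the sketch below shows the same idea with Python's standard library, purely as an illustration. The `render_comment` helper is hypothetical.

```python
import html

def render_comment(user_input):
    """Escape user-supplied text before placing it inside HTML."""
    return f"<p>{html.escape(user_input)}</p>"

# A form submission that tries to inject a script tag.
unsafe = '<script>alert("x")</script>'
print(render_comment(unsafe))
```

With escaping in place, the browser displays the submitted text instead of executing it.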

[00:30:30.020] - James LePage

So what that looks like now is a process that goes on from when you submit the prompt to when the snippet is generated. And the reason I said it's expensive to do these things is, if you look at the pricing, it's probably a couple of cents per generation if you just put it in there. But for us, we're doing maybe eight or nine generations to get you to the snippet, depending on the prompt that's given. So if you input your prompt into the platform, we use OpenAI's cheaper models to analyze the prompt and say: what's the prompt doing? What's the end goal here, and how do you think it should be done? And then we check it with GPT-3, which is a more accurate, creative, but logical model, and we manipulate the prompt from that one sentence into multiple instructions and multiple prompts. In addition to that, sometimes we translate, and sometimes we choose to just pass it through, if it's one of those boilerplate "create this post type" prompts. But if it's determined that this is a complex generation that needs multiple steps and kind of a thought process, we create this overarching recipe for the snippet.

[00:31:52.120] - James LePage

From that, we can route it to a custom fine-tuned model. I forgot to mention that we have a general mode, which this applies to. We also have fine-tuned modes specific to different plugins. WooCommerce is a good example here. The WooCommerce mode is a fine-tuned mode based off of GPT-3, and it uses all of the snippets that we had created in the agency to fine-tune it and give it additional context and information. Here's what a proper snippet looks like. Here's what the code should do. Here's how the code should act. So if you're choosing that mode, it's much more tailored towards that specific product output. It understands, in a sense, what you're looking for, how that product works, and how the code should work from that. In the generation process, we have two routes. One route could be that you're just creating a blanket snippet based off of these instructions that we've generated. Or we're going to chunk it even further and create multiple pieces of code, then take another layer of the AI, understand what that code does, and then morph it back into one big snippet that all works together to fulfill your needs.

[00:33:19.310] - James LePage

Then once that's done, we run it through another check that says, is this snippet secure? Look at escaping, look at X, Y and Z, all of these different concepts. And if you instruct the model to do that, and it's fine-tuned on the security best practices, it really gets you to a piece of code that will work and will be secure. Now, where it becomes a problem is that we have great outputs, but the quality of the prompt is now the biggest roadblock. If you don't know what you want, and if you don't really know how to ask for it, you'll get code that works and kind of does something, but it might not be exactly what you need it to do. That's kind of just the premise of AI, and it's why, when you register for the platform, there's an onboarding video and there's a disclaimer saying you have to test this, you have to read the code. If you don't know how the code works, go ask somebody who does. We have support, and we'll refer you to developers. And that all stemmed from users using the platform and us watching what happened with the code in the early days.
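Editor's note: the steps described above (analyze the prompt, pick a mode, generate in one pass or in chunks, then run a security check) can be sketched as a small pipeline. This is an illustration only and not CodeWP's implementation: every function is a hypothetical stand-in that returns canned output instead of calling a model, and the routing rules are invented for the example.

```python
def analyze_prompt(prompt):
    """Stand-in for the cheaper-model analysis step: guess complexity from the wording."""
    is_boilerplate = prompt.lower().startswith("register")
    return {"goal": prompt, "complex": not is_boilerplate}

def pick_mode(prompt):
    """Route to a plugin-specific mode when the prompt names that plugin."""
    return "woocommerce" if "woocommerce" in prompt.lower() else "general"

def generate(instruction, mode):
    """Stand-in for a model call; returns a labeled placeholder instead of real code."""
    return f"// [{mode}] code for: {instruction}"

def security_check(snippet):
    """Stand-in for the final review pass; a real check would inspect escaping and more."""
    return "TODO" not in snippet

def run_pipeline(prompt):
    analysis = analyze_prompt(prompt)
    mode = pick_mode(prompt)
    if analysis["complex"]:
        # Complex requests are split into steps, generated separately, then merged.
        steps = [f"step {i + 1} of: {prompt}" for i in range(2)]
        snippet = "\n".join(generate(step, mode) for step in steps)
    else:
        # Boilerplate requests pass straight through in a single generation.
        snippet = generate(prompt, mode)
    return {"mode": mode, "snippet": snippet, "passed_security": security_check(snippet)}

result = run_pipeline("Hide the Add to Cart button in WooCommerce on the shop page")
print(result["mode"], result["passed_security"])
print(result["snippet"])
```

Each stand-in would be a separate model call in a real system, which is why a single snippet can cost several generations rather than one.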

[00:34:31.830] - James LePage

So that's kind of the process. And I think that there's a really big focus on the security implications here just because it's so important and also because it's not just our community users anymore. It's people who may be developers, but they may be somebody who's looking for a solution and isn't a developer. So there's a lot of thought process and a lot of layers to the generations that we're making now. And I mean, the upcoming models that are going to replace these existing ones are even more complex and honestly more expensive for us. But I think it all moves towards just a better product for everybody and something that more people will be comfortable using in the future.

[00:35:25.210] - Doc Pop

CodeWP is not a no-code solution. The expectation is that people either understand code and can review it themselves, or that they submit a request for you all to verify it. Am I understanding that latter part correctly?

[00:35:44.600] - James LePage

Yeah, we offer the support so people can kind of ask questions. And a lot of it, and this is something that's kind of just popped up, becomes pointing people towards other plugins' development teams and saying this is probably a legitimate request that you want to make to this company instead of trying to figure it out on your own. But I mean, it really boils down to creating code that's usable by everybody. At this point we suggest that you really understand the code, and we emphasize that at platform registration and also every time you open the generator: understand the code. If you don't understand the code, here's a link to Codeable. I mean, there are a lot of resources out there. Something that we've also been trying to do, which is difficult because we're not the biggest team and we're not venture backed, is education. The money from Isotropic went into the initial development here, and now it's somewhat supporting itself. But it's not one of those startups in the sense that we can burn $100,000 a week. Something that we are working on now is an educational series on best practices for prompting, how to completely test these snippets, a video series, all of these different educational pieces which will allow people who aren't developers by trade to really make the best use of these tools.

[00:37:19.000] - James LePage

So that's a pretty high priority now, and it's become a high priority as more and more people begin to use the platform. I mean, we're seeing exponential growth because people love it and it really helps people with their day-to-day creation in WordPress.

[00:37:41.010] - Doc Pop

Anthony, I've been hogging the mic. Do you have some questions here?

[00:37:47.270] - Anthony Burchell

One of the things that kind of sticks out to me, and I was curious about this, is that every prompt is like two or three more questions away from a perfect answer, and it seems like you all are doing that: you get that initial response, then you run lower-end models to parse it and make decisions based on it. And it reminds me a lot of this AI system that I've been using called MagickML. It's like a node-based editor where you just drag these wires out and connect different pieces of the AI together based on different logic, so that you can do, like, multi-shot queries where you say, send this to Curie and give me sentiment analysis, and then send this final response to Davinci and give me the actual response. And then maybe run sentiment analysis on the final response and send back what the agent is feeling. So just a shameless plug for this project that I've been really excited about. It's called Magick. I would highly recommend taking a look at it because it's really cool. It's just like a graphical editor where you can kind of build out what they call spells.

[00:39:01.350] - Anthony Burchell

So every spell is like an endpoint that gives you a response, and you can kind of build out these really complex logic pieces, even down to Python. You can run Python from it. It's got JavaScript parsers in the middle of every shot so that you can take the response strings, parse through them, do things with them, and then send that out somewhere else. It's really cool. They did some really interesting stuff with Discord voice bots where they were sending to a voice model to respond back with a WAV file of it speaking the response, and then it shoots that back into Discord voice. It's really cool how fast people are building, because this project was started maybe a year ago. So it's really cool to see everyone kind of working together and building cool things.
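The multi-shot flow Anthony describes (sentiment analysis on one model, the actual reply on another, then sentiment analysis again on the reply) can be sketched in a few lines of Python. This is only an illustration of the chaining idea, not how Magick works internally: the `run_chain` helper, the step format, and the "small" and "large" stand-in models are all hypothetical, and the lambdas return canned text instead of calling a real API.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical type: any function that takes a prompt and returns model text.
Model = Callable[[str], str]


def run_chain(message: str, models: Dict[str, Model],
              steps: List[Tuple[str, str, str]]) -> Dict[str, str]:
    """Run steps in order. Each step is (output_name, model_name, template).

    A template may reference {message} and any earlier output_name, so each
    shot can build on the results of the shots before it.
    """
    context = {"message": message}
    for output_name, model_name, template in steps:
        prompt = template.format(**context)
        context[output_name] = models[model_name](prompt)
    return context


# Stand-in models (assumptions): a cheap classifier and a larger responder.
models = {
    "small": lambda prompt: "negative" if "broken" in prompt else "positive",
    "large": lambda prompt: "Let's get that fixed together.",
}

steps = [
    ("user_sentiment", "small", "Classify the sentiment of: {message}"),
    ("reply", "large", "The user feels {user_sentiment}. Reply to: {message}"),
    ("reply_sentiment", "small", "Classify the sentiment of: {reply}"),
]

out = run_chain("My site is broken after the update", models, steps)
print(out["user_sentiment"], "->", out["reply"], "->", out["reply_sentiment"])
```

Keeping each step as data rather than code is what makes a visual, wire-it-together editor possible: the graph is just a list of steps and the connections between their inputs and outputs.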

[00:39:55.470] - James LePage

Yeah, I think it's such an interesting industry because I've never seen something move this quick. Every other week there's something new and something new. So it's probably honestly out of fashion at this point, but last week it was chaining requests, as you just said. And I think the code equivalent to that was LangChain, which I believe is for Python. And it does the same thing. It creates these ever-expanding chains of requests to get you from point A to point B. The models aren't incredible. Or they are incredible, I mean, looking back a year ago, but they could be better. But to get to the outputs that we want now, chaining requests using AI is probably one of the best ways to get there with the best models we have, which is really GPT-3. There's really nothing competing with it at a high level. But I think that was something that was super popular last week, and now this week it's embeddings, and now this week it's this and that. It's really cool how quick it kind of moves forward. And I'm seeing just things that we've been working on with the chaining and stuff kind of becoming more accessible to more people.

[00:41:15.580] - James LePage

And it's cool to see that our ideas are kind of what the rest of the people are doing. And it's also cool to see it becoming a lot more accessible to people, even though you do need to use code in most situations. It's just so interesting how quick this industry moves, and eventually GPT-4 is going to come and that's going to be something even crazier. It's just ever moving forward. It's really cool.

[00:41:48.370] - Anthony Burchell

Hey Doc, can I share my screen and just show this?

[00:41:51.480] - Doc Pop

Go ahead.

[00:41:52.150] - Anthony Burchell

Because I think this will help visualize what we're talking about here, since people are hearing terms like chaining and multi-shot and all of that. So this is the open source project I was talking about.

[00:42:04.470] - Doc Pop

One second, I've got to create. How do I show it? Well, I guess I can just do.

[00:42:15.530] - Anthony Burchell

So this is called Magick, and they have all of these components you can create that do different things. Like you can do logic-based stuff with the strings that are coming in from the different shots of data. So when I send a message here, I'm just going to shoot one through. It's probably not going to work because I don't have my OpenAI key input. But the idea is that your text comes through on the screen at one, and it goes through all of this logic to then get to the very final end response. But in this logic are things like memory recall. This is a really cool feature where, if a speaker said something to the chat agent that is worth writing down, a fact about them to recall later on, it'll deduce that it's a fact about somebody and write that down, so that the next time that person comes through it can say, oh, I remember you. You're that person that drives a Lambo or whatever. It just remembers facts that are relevant to who you are. You've got all of these different logic switches that come in that can switch based on all kinds of data.

[00:43:29.270] - Anthony Burchell

It's really deep, but it's got these things like greeting data, it's got goodbye data, it's got troll data so it can figure out if you're trolling the agent and handle trolls in its own spell. It's got an end for goodbyes. But these are just super open ended, where you can create all of this very complex logic and wire it up however you want it to happen, because it's based on an input trigger, a trigger that someone sent a message, and also their message. And those two pieces of data just get sent through the chain and get handled. But yeah, I thought I'd show this off because it kind of visualizes how all of this logic is going through the system and being parsed.

[00:44:12.170] - James LePage

Yeah, I think that's a beautiful representation of kind of what everybody's talking about and I'm definitely going to have to check that out just to show others what it is because I think it's very complex when you're speaking it, but if you can see it, it's like, oh, it's kind of just a thought process. It's thinking about what do they actually want? And then from what they want, how can I do it? And then from how I can do it, maybe a couple more steps and there's a lot that goes on. But if you can boil it down like that, I think it becomes a lot easier to understand kind of the core concepts of just what the most up to date implementations of AI are doing now to get from that one thing to the other thing.

[00:45:00.960] - Anthony Burchell

Yeah, totally. This project is really cool. It's been awesome to see how many people are jumping in and contributing to it. And they've got Stable Diffusion in here. So if you have Stable Diffusion running on the same server as this, it can generate images and pass those back in the REST response. So it's really open ended. You can even hook it up to Telegram. I've got a cell phone for one of my entities right now so that I can text them whenever I want. Stuff like that. It's so open ended right now. It's a really interesting time. I think even with all of the bad in AI, there's so much good that even the smartest people I know are just raving about the things that they're able to do with this. And they're not saying AI is absolutely what we're going to depend on, but they are saying, hey, I can get my work done maybe 15% faster now because I don't have to go figure out how this framework is configured. That's pretty good, especially when it's trained on documentation that those frameworks want you to have and understand anyway. That's the stuff where I feel like that's super ethical.

[00:46:05.790] - Anthony Burchell

That's exactly what these people want. They want their documentation out there and they want people to learn. I don't think anyone's going on ChatGPT and saying, I want to make a competitor for X plugin and I'm going to take them down because my AI version is going to be so much better. Like, no, we're just trying to get one problem solved at a time so that we can move on to the next thing that we're really good at. Interesting time.

[00:46:36.750] - James LePage

It's really crazy, I think, the impact that AI has already had and how quickly it's had it. They kind of just released it. Especially with ChatGPT, it didn't exist and then everybody was using it. They had a million users in like five days. So it's really just crazy how impactful it's already been. And every day these new papers are coming out with, oh, this method of processing this minute chunk of data, when it runs through this pipeline of layers of a neural network, will result in this much better output. And every day these academics are releasing it, and it's only a matter of time until it hits the public market. It's really just exponentially increasing in terms of what you can do with this technology and how impactful it is now and will be in the future. It's really an interesting time to be a part of, but there are also a lot of thoughts coming out of that about how this will impact everything, because it definitely will.

[00:47:41.890] - Doc Pop

Yeah, we talked a little bit about how the model is trained and I'd like to dive more into that. That's one of the issues. So AI could be anything, like AI detecting red eyes in photos. AI is used for that. It could be used for all sorts of stuff. So when we say AI, it's a broad thing. But I think one of the common issues that comes up with AI when a lot of people think about it is what data it's trained on. When they're thinking about visual tools like DALL-E or Stable Diffusion, they're thinking about artists whose work it was trained on without their permission. And then we think about Copilot, and that's a system that GitHub has where I think people opted in or maybe you have to opt out, but still, Microsoft said, hey, we're going to be using your data to train this. How are you collecting your data, and is there a license involved with that that you're passing on?

[00:48:37.270] - James LePage

Yeah, so this is a really interesting question, and it honestly wasn't a question that we were thinking about up until maybe the beginning of last month. And that was just because CodeWP went from "this is a cool community project" to "there are thousands of users creating hundreds of thousands of snippets." This is impactful in WordPress and elsewhere. So the first question is, where is the data coming from? And the answer to that is from examples that we have generated at Isotropic, the agency, and then also examples that users rate as good once they create them. And then all of that's being layered on top of a core GPT-3 API. And also Cohere. But assume GPT-3. And for those who aren't familiar, Cohere is just another solution, just like GPT-3's API. So we take all these examples and then we fine-tune GPT-3 to say we want the kind of logic and creativity that you have. We also want the ability that you have to understand prompts, which is a lot better than Copilot, which is using Codex, OpenAI Codex. So we want these features, but we also want you to output code, and we want you to output code like this, formatted like this, similar to what I was talking about when we were discussing security.

[00:50:14.630] - James LePage

So that's kind of the core premise there. The fine-tuning of a model allows it to create outputs more tailored towards the use case that you want. On top of that, though, we also use embeddings: when somebody shoots a prompt into the platform, it's embedded into a huge string of numbers called a vector. And that vector, I don't know how to easily explain this, but it represents kind of what that prompt means. And then from all of the other good-rated prompts on CodeWP that were then approved by myself or my developers as good code, we take that and we match it. And if it's a 95% or higher match, we also embed that as context into the model and we say, this is good code. While the request might not be exactly for this snippet, it's a good example of what we want. And that really creates a self-learning system that's ever improving from the community and gets us to that output. So if we look at where the data comes from with GPT-3 in general, that's the Common Crawl scrape, I believe it's like 45 terabytes of data, and it gives it its logic and reasoning and "here's why I do what I do," and that's what actually ends up being kind of condensed down into parameters.

[00:51:50.610] - James LePage

So everybody talks about how GPT-3 has 170-something billion parameters, but GPT-4 will have a trillion. That's what they mean by that. It's all the data condensed down into "here's the decision that I'm going to make in this specific use case." Hopefully that's not as complex as it might sound.
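To make the embedding match described above more concrete, here is a minimal Python sketch of looking up a vetted snippet whose prompt vector is at least a 95% match for a new prompt. The interview does not say which similarity measure CodeWP uses, so cosine similarity is an assumption here, and the tiny three-number vectors and snippet names are made up; real embeddings have hundreds or thousands of dimensions and come from an embedding model.

```python
import math
from typing import List, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(query: Sequence[float],
               library: List[Tuple[str, Sequence[float]]],
               threshold: float = 0.95) -> Optional[Tuple[str, float]]:
    """Return the most similar vetted snippet at or above the threshold."""
    best: Optional[Tuple[str, float]] = None
    for snippet, vector in library:
        score = cosine_similarity(query, vector)
        if score >= threshold and (best is None or score > best[1]):
            best = (snippet, score)
    return best


# Made-up vectors standing in for embeddings of approved prompts.
library = [
    ("rest_endpoint_with_permission_check", [0.9, 0.1, 0.0]),
    ("shortcode_example", [0.0, 0.2, 0.9]),
]

close_query = [0.88, 0.12, 0.01]   # similar in meaning to the first entry
unrelated_query = [0.5, 0.5, 0.5]  # not close enough to either entry

print(best_match(close_query, library))
print(best_match(unrelated_query, library))
```

When nothing clears the threshold, the lookup returns nothing and the prompt would simply go to the model without an extra example, which matches the "if it's a 95% or higher match" condition in the description.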

[00:52:09.770] - Anthony Burchell

Yeah, maybe I'll say it back to you, to see if I understand it right. One example is I've hit ChatGPT and I've asked for a REST API endpoint that changes data in the database, and it of course gave me back something that didn't check for permissions or anything. It was just free access to the database. So in that scenario your system would do something like show an example of registering a REST API endpoint that does do the permissions check or whatever it is, so that when it's asked that question, it can compare against that. Is my understanding right there?

[00:52:46.710] - James LePage

Yes. I mean, in terms of CodeWP, yes. And it's pulling from the training that we've given it, which gives it that last layer of "here's really what we want." You can use your logic and reasoning and the essential knowledge that you have from your general model, but at the end of the day we really want you to bias towards this type of code, and we want it to be code. We don't want you to output text or whatever. And from that fine-tuning, it gives us the premise that it's going to output good WordPress code. And then on top of that, when you take a snippet, a full snippet that is 100% vetted, really secure, well coded, it works and developers have reviewed it, and embed that directly on top of the prompt and the training, it pulls even more from that. And because it's related to the question, it pulls from that and it says, oh, I see that they're doing the permissions callback with a "can edit" check or something like that. And because of that I'm going to do the same thing. Pairing those two things together makes it output what we want.
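The idea of placing a vetted snippet on top of the prompt can be sketched as simple prompt assembly in Python. Everything here is illustrative: the instruction wording and the `build_prompt` helper are assumptions, and the PHP example (the `demo/v1` route and `demo_update_item` callback are hypothetical names) just shows the kind of permission check Anthony and James are talking about, using WordPress's `register_rest_route` with a `permission_callback` that calls `current_user_can`.

```python
from typing import Optional

# A vetted example that a developer has reviewed. The route and callback
# names are hypothetical; the permission_callback is the part that matters.
VETTED_EXAMPLE = """\
register_rest_route( 'demo/v1', '/items', array(
    'methods'             => 'POST',
    'callback'            => 'demo_update_item',
    'permission_callback' => function () {
        return current_user_can( 'edit_posts' );
    },
) );
"""


def build_prompt(request: str, example: Optional[str] = None) -> str:
    """Assemble the text sent to the model, with an optional vetted example."""
    parts = ["You write secure WordPress PHP. Output code only."]
    if example:
        parts.append(
            "Here is a vetted example of good code. The request may differ, "
            "but follow the same practices:\n" + example
        )
    parts.append("Request: " + request)
    return "\n\n".join(parts)


prompt = build_prompt(
    "Register a REST API endpoint that updates a custom post type",
    VETTED_EXAMPLE,
)
print(prompt)
```

With the example in the prompt, the model has a concrete pattern to imitate for the permissions check instead of relying only on what it learned in general training.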

[00:54:13.710] - James LePage

And I think, I don't know if this is linked in a description or something, but we had mentioned that "Should WordPress Users Use ChatGPT?" article that I published, and I kind of covered some of the issues that a lot of people are facing with ChatGPT. It's a really big platform. It covers everything, and because it covers everything, it's really good at everything, but not great at one specific thing. And that's because it's not fine-tuned in those specific industries. So with WordPress specifically, it's pulling from this humongous understanding of everything, but it's not as if it's studied WordPress, and it's not as if it's like, oh, these are the best practices in this specific situation. So if you put in "I want a REST API endpoint to hit this custom post type" or whatever it is, it'll output what it thinks it should output, but not what it knows it should output. So that's where I think the embeddings and fine-tuning and essentially teaching it what it needs to know come into play. And I kind of covered that in the article on Isotropic, in that if you fine-tune it, it gets really good, but because it's not fine-tuned and because there's no real disclaimer there, it's a lot tougher to create really good code from it.

[00:55:42.510] - James LePage

So I think that's an issue. It's not just with ChatGPT. It's just that because ChatGPT is so accessible to everybody and so viral, it manifests itself there. But if you were using OpenAI's Playground interface to access their API a year and a half ago, it's the same problem. It's just not fine-tuned, it's not taught. It's not like it went to school to learn how to properly develop a REST API endpoint. So that's kind of where it needs a little help focusing.

[00:56:15.830] - Anthony Burchell

One of the cooler things that I've done that was pretty interesting is I fed a ChatGPT session a block.json file, and this was a custom block that I made. And I just said, here, explain this block back to me. With just the context of the JSON, it understood, even though my block was a 3D block and like a 3D renderer. It completely understood the concept that a block has attributes, and these attributes are describing what it can do in a 3D world. And it was able to respond back to me. I was making an NPC block and whatever, and it explained back all my attributes. And then from there I was able to say, well, okay, now write a blog post about that so that I can at least have a frame for talking about this. Then in that same exact conversation, I was able to ask, well, knowing what you know about the block.json, write me a new block.json for a new block that does X thing. And it was able to build a new one based on that. And I found that that flow of iteration was really, really nice.

[00:57:20.760] - Anthony Burchell

And I could see that being sort of similar to what you're doing there with the snippets coming through, you start parsing through and adding more functionality until you get this final thing that just does what you need. It's really fun.

[00:57:33.970] - James LePage

It's very similar. And I think a lot of people who use ChatGPT for development are seeing, oh, if I give it context, if I say, here's my project, here's what I'm working on, here's what I need, I need it to be secure, here's an example that I found on Stack Overflow that had 400 upvotes so I know it's probably right, do something similar to this, that's in essence what we're doing on a larger scale with CodeWP. And when you do that, you really see the model's understanding of everything shine, because it can pull in, as you were saying, maybe a 3D library, because it understands that, but it also understands WordPress. And it's been given special information about the specific situation to allow it to use its creativity and logic and reasoning to the biggest effect. And I think we're going to see that a lot more, not just with products like CodeWP, but with the general models themselves. When you add more parameters, they have more information and more ability to reason in a specific industry and get you what you need. That is correct.

[00:58:41.830] - Doc Pop

I want to go back to this because I'm not sure that I've fully understood how this is trained. You said you start with the code that Isotropic wrote, and I want to confirm that Isotropic, to train this, is not scraping the WordPress repository, like wordpress.org. There's no scraping there?

[00:59:05.010] - James LePage

No. In the beginning we thought that it would be really cool if I could just take a plugin, go on the repository, download it and then upload it, and the machine would learn. And that's not really ethical. I think if I were to publish a plugin, I wouldn't want that. So the models that we have now, all of the live models, are trained on multiple iterations of similar and different code snippets for that specific use case, created by us. In the future, instead of using those snippets, we're looking at how we can incorporate code bases from plugins that have given us permission to use those code bases. We have a list of around twelve who have said, yeah, we're fine with that. But then, and I know we're out of time, then it becomes a conversation about how this is GPL code and it's probably going to output something similar to that. So where does that leave us? And I think there's a lot of conversation to be had around that too. It's very interesting. Right now what we're doing is not really in the gray area, but in the future, to create the products that our customers want, we're going to have to figure something out for that.

[01:00:25.960] - Doc Pop

I know we are at time, but there is a stipulation Matt Mullenweg has in WordPress that all derivative works must be licensed as GPL. And I don't know exactly how. That's always been a thing that sounded a little bit less like an official thing and a little bit more like a nice-to-have kind of thing. I don't know if that's a thing you can do, but Anthony, if you have more knowledge of this, feel free to chime in. But would that mean that if these are for WordPress, and all derivative works for WordPress, not just code that was licensed, but anything built for WordPress, is GPL, does that mean that this should be GPL as well?

[01:01:06.300] - Anthony Burchell

I think it does. If it's derivative of GPL, it needs to be GPL. And that's sort of why, in my stuff, I've been only using this for GPL stuff. Like I have not once used ChatGPT for work that I'm doing in my day job, but in my afternoon projects, all of my work right now is open source and GPL, so it doesn't feel as wrong, especially considering a lot of my questions are about core functions. So it's WordPress core things that I need. I'm just using WordPress core. I'm not trying to write my own WordPress. But I think when you start getting into this, like, I don't know if the word is competing functionality, or if it's when you're generating code that's almost identical functionality to something, I think it needs to be licensed GPL at least. That's the safest thing to do, in my opinion.

[01:01:59.990] - James LePage

Yeah, and I think it's a really interesting topic because it's really the Wild West, in that this showed up and now we're trying to figure it out. And I think in terms of WordPress, there's the conversation around GPL and what is derivative work. And it's something that, again, over the past month or so, we've been trying to iron out and really focus on: how to navigate this weird gray area where there's no legal precedent. We were kind of banking on the Copilot lawsuit, which looks like it's probably not going to happen, which is honestly horrible because we need precedent. Like, we need to know these things. I think where we're at right now is we give the users ownership over the snippets. The snippets themselves are not reproducing GPL work. I know Copilot went pretty viral in that it was, like, word for word copying big chunks of people's open source creations. I think the method that we're using, with multilayered prompts and also staying away from Codex and focusing more on using GPT-3, is creating something that is very far away from something that you will find online. With that said, I'm a WordPress creator, and in the future there are a lot of options that I think we'll be able to use in terms of being more open with what we're doing.

[01:03:42.920] - James LePage

So we're kind of stuck using these big AI companies because we can't afford to train our own models right now. But with that said, there's a lot of movement in the industry, the sub-industry of AI and large language models specific to code. And there's a really cool project that I've been following closely called BigCode. It's a very similar model. It's trained on a humongous chunk of code from the Internet. But instead of doing what Copilot and GitHub did, or even, in a sense, what OpenAI did, scraping the entire Internet, what they're doing is sourcing code that has permissive licenses, that's above board, and giving people the ability to say "we don't want our code to be in this data set," and also open sourcing the data set and open sourcing the model and the whole process behind it. So I think that's probably the future of at least code generation that everybody's happy with. And I also think, for me, if CodeWP grows into a much larger business, creating open source models ourselves specific to WordPress is something that we would love to do, but again, we don't have the resources to be training models.

[01:05:09.180] - James LePage

I think GPT-3 cost $14 million or something to train, and we only have a couple of developers who barely get paid enough to do what they do now. So I think it's a really interesting industry. And again, I'm not a lawyer and I don't really know that much about it. We're talking with lawyers to try to figure out what the licensing should be. It's so interesting because it really happened so fast. The industry hasn't caught up, in code but also in art and everything else. Very interesting and big topic.

[01:05:48.990] - Doc Pop

Well, I really appreciate you chatting with us, James. It was nice for us to stop speculating about what's going on at CodeWP and be able to talk to you about it and hear your thoughts on it. I know there are a lot of questions we didn't even get around to, but if people want to learn about what you're working on, what's a good way for them to follow you?

[01:06:10.630] - James LePage

Yeah, you could follow me on Twitter, which I recently got, at James W LePage. If you're interested in CodeWP and AI generation for code and WordPress in general, go on Facebook and join the CodeWP group. It's not just for users of the platform. It's for people who are interested in what we're doing, but also what we think should happen, and also discourse just around what's going on now. So I'd say if you're interested in that specific aspect of it, go join that group. Also, CodeWP is free for ten generations a month. You can do really complex stuff with it. So if you want to see what it is and how it works, go check it out. And that's really what I have. If there are a lot more questions that we can really dive into, I'd be happy to jump on in a few weeks and chat about that as well. This is something that I find really interesting personally, and I know a lot of other people find it interesting too, just to think about, because, again, there's no precedent really with any of this in any aspect of AI generation.

[01:07:22.180] - James LePage

So talking about it, I think, is the way to go. So, yeah, that's how you can follow me. Those are my thoughts and I'm super glad to have been invited on this podcast.

[01:07:35.080] - Doc Pop

Yeah. And Anthony, if people want to follow you, what's the way to do that? Oh, you're muted, I think, at the moment. Well, Anthony is at twitter.com/antpb, @antpb, where he is often talking about his WordPress plugin that allows you to build a metaverse within WordPress using posts as rooms. Posts as rooms. I remember that. So this is the end of the Torque Social Hour, the weekly livestream that we do about WordPress news and events. You can follow us at TheTorqueMag on Twitter. That's @thetorquemag. Or you can go to torquemag.io, where you can see these episodes as well as our Press This podcast and all our tutorials. Thanks, everyone, and thanks to James and Anthony for joining us. It was a great conversation, and we will see everyone next week.